OpenGL Renderer Design

Lately I’ve been writing lots of OpenGL programs for course projects and for-fun rendering side-projects. I’ve started to notice an overall design that works pretty well for these kinds of projects, and I’m going to document it here.

This design matches closely the idea of “Model-view-viewmodel” aka. “MVVM”. If you’re unfamiliar with this term, be aware that the words “model” and “view” here aren’t talking about 3D models or cameras or transformation matrices. MVVM is an object-oriented architectural pattern, similar to MVC (“Model view controller”). MVVM maps to the design of a renderer as follows:

- Model: The scene which the renderer should render.

- View: The renderer that traverses the scene and displays it.

- View model: The data the renderer uses internally to render the scene.

Another important inspiration of the design in this article is the relational database. While I don’t use an actual relational database (such as a SQL database) in my projects, I think the principles of relational databases are useful to reason formally about designing the model in the MVVM, so I’ll talk a bit about that in this article too.

In my projects, the three parts of MVVM are split into two C++ classes: “Scene”, and “Renderer”. In short, the Scene stores the data to render, and the Renderer reads the Scene to render it. In this article I’ll first talk about how I design the Scene, and then I’ll talk about how I design the Renderer.

Scene

The “Scene” class is very bare-bones: it contains essentially no member functions. Treat this class like a database: it has a bunch of tables in it, the tables contain data that possibly refer to other tables in the database, you can read/write the objects in the tables, and you can insert or erase elements from tables.

In a typical renderer, you might have a table of all the materials, a table of all the meshes, a table of all the instances of meshes, a table of all the cameras, and so on. The specifics of these tables depend on your project, so if you’re doing a skeletal animation project you would also have a table of the skeletons, instances of skeletons, and so on.

I found that designing the schema of the database is a big part of the requirements gathering phase for a project. Once you nail the design of the schema, the code you have to write to manage your data becomes more obvious (“I just need to hook up all this information”). Later modifications to your code can be done by figuring out how your schema needs to change, which is made easier since the relationships between your objects are laid bare all together in one place.

In practice, I implement the tables of the database as C++ containers. This allows me to use containers with the access and update patterns I need for the project I’m working on. For example, I might want the objects in a table to be contiguous in memory, or do insertion/deletion of objects in arbitrary order, or be able to efficiently lookup an object by its name.
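As a rough illustration of picking a container per table, here is a minimal sketch. The names `SceneTables`, `CamerasByName`, and `AddMaterial` are hypothetical and do not come from my projects; the point is only that each table can use whichever standard container matches its access pattern.

```cpp
#include <cstdint>
#include <string>
#include <unordered_map>
#include <vector>

// Hypothetical row types, for illustration only.
struct Material { std::string Name; float Shininess; };
struct Camera   { float FovY; float Aspect; };

// Each table is a container chosen for how that table is accessed.
struct SceneTables
{
    // Contiguous storage: cheap to traverse every frame.
    std::vector<Material> Materials;
    // Keyed by name: efficient when objects are looked up by name.
    std::unordered_map<std::string, Camera> CamerasByName;
};

// "Inserts a row" into the Materials table and returns its index as an ID.
inline uint32_t AddMaterial(SceneTables& scene, const std::string& name, float shininess)
{
    scene.Materials.push_back(Material{ name, shininess });
    return static_cast<uint32_t>(scene.Materials.size() - 1);
}
```

Using the vector index as an ID only works here because nothing is ever erased from `Materials`, which is the caveat the next section deals with.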

The design of the Scene class involves many concepts from relational database design. Let’s take some time to consider how primary keys, foreign keys, and indexing apply to the design of the Scene.

Primary Keys and Foreign Keys

Tables usually have a “primary key”, which can be used to identify each object in the table uniquely, and can also be used to efficiently look up the object. When an object in one table wants to refer to an object in another table, it can do so by keeping a copy of the primary key of the referred object, a concept also known as a “foreign key”.

An example of this situation is a mesh instance keeping a reference to the mesh associated to it, and keeping a reference to the transform used to place the instance in the world. Each mesh instance would then have a foreign key to the mesh table, and a foreign key to the transform table.
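The mesh instance example can be sketched as follows. This assumes the simplest possible scheme, where the primary key of a row is its index in a `std::vector`; `MiniScene` and `MeshOf` are made-up names for the sake of the example.

```cpp
#include <cstdint>
#include <vector>

struct Mesh      { uint32_t IndexCount; };
struct Transform { float Translation[3]; };

// A mesh instance stores foreign keys rather than copies of the data.
struct Instance
{
    uint32_t MeshID;      // foreign key into the mesh table
    uint32_t TransformID; // foreign key into the transform table
};

struct MiniScene
{
    std::vector<Mesh> Meshes;
    std::vector<Transform> Transforms;
    std::vector<Instance> Instances;
};

// Follows an instance's foreign key to the mesh it refers to.
inline const Mesh& MeshOf(const MiniScene& scene, const Instance& instance)
{
    return scene.Meshes[instance.MeshID];
}
```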

Primary keys and foreign keys can be as simple as integer IDs, though some care is required if you want to avoid foreign keys becoming invalid when modifications are done on the table they refer to. For example, if you decide to use a std::vector as the container for a table, erasing an element from the vector might cause objects to get shifted around, which will invalidate foreign keys referring to them.
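To make the invalidation problem concrete, here is a small sketch (with hypothetical names) of what happens when vector indices are used as keys and an element is erased.

```cpp
#include <cstdint>
#include <string>
#include <vector>

struct NamedMesh { std::string Name; };

// Erases the mesh at `index`. Every element after it shifts down by one,
// so any stored index greater than `index` now refers to a different
// mesh, or to nothing at all.
inline void EraseMeshAt(std::vector<NamedMesh>& meshes, uint32_t index)
{
    meshes.erase(meshes.begin() + index);
}
```

For example, if "lamp" lives at index 2 and the mesh at index 0 is erased, "lamp" moves to index 1, and a foreign key that still says 2 is now out of range.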

In order to support insertion and deletion, I use a container I call “packed_freelist”, based on bitsquid’s ID lookup table. This container gives each object an ID that won’t change as elements are inserted and deleted, and allows efficient lookup of objects from their ID. The packed_freelist also automatically packs all objects in it to be contiguous in memory, which is desirable for efficient traversal.
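To give an idea of how such a container can work, here is a simplified sketch. It is not the actual packed_freelist code: the class name `PackedTable` and all of its members are assumptions made for this example, and it leaves out features a real implementation would likely want, such as detecting stale IDs after a slot is reused.

```cpp
#include <cstdint>
#include <utility>
#include <vector>

template<class T>
class PackedTable
{
public:
    // Inserts a value and returns an ID that stays valid until erase(id).
    uint32_t insert(T value)
    {
        uint32_t id;
        if (!mFreeIDs.empty()) { id = mFreeIDs.back(); mFreeIDs.pop_back(); }
        else { id = static_cast<uint32_t>(mIDToDense.size()); mIDToDense.push_back(0); }
        mIDToDense[id] = static_cast<uint32_t>(mObjects.size());
        mObjects.push_back(std::move(value));
        mDenseToID.push_back(id);
        return id;
    }

    // Erases by moving the last object into the hole, keeping storage packed.
    void erase(uint32_t id)
    {
        uint32_t dense = mIDToDense[id];
        uint32_t last = static_cast<uint32_t>(mObjects.size() - 1);
        if (dense != last)
        {
            mObjects[dense] = std::move(mObjects[last]);
            mDenseToID[dense] = mDenseToID[last];
            mIDToDense[mDenseToID[dense]] = dense; // fix up the moved object's ID
        }
        mObjects.pop_back();
        mDenseToID.pop_back();
        mIDToDense[id] = kInvalid;
        mFreeIDs.push_back(id); // this ID may be handed out again later
    }

    bool contains(uint32_t id) const
    {
        return id < mIDToDense.size() && mIDToDense[id] != kInvalid;
    }

    // Constant-time lookup from ID to object.
    T& operator[](uint32_t id) { return mObjects[mIDToDense[id]]; }

    // All live objects, contiguous in memory, for traversal.
    const std::vector<T>& objects() const { return mObjects; }

private:
    static constexpr uint32_t kInvalid = 0xFFFFFFFFu;
    std::vector<T> mObjects;          // packed objects
    std::vector<uint32_t> mDenseToID; // packed position -> ID
    std::vector<uint32_t> mIDToDense; // ID -> packed position
    std::vector<uint32_t> mFreeIDs;   // IDs available for reuse
};
```

The extra level of indirection is the price of stable IDs: a lookup goes through `mIDToDense`, while traversal touches only the packed `mObjects` array.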

Another way to implement foreign keys is to update the foreign keys when their pointed-to table is modified, which might be automated with a more sophisticated scene database system that is aware of foreign key relationships. On the simpler side of the spectrum, if you know that a table will never change after initialization, or if you only support insertion through push_back(), you can be sure that your foreign keys will never be invalidated with zero extra effort. You might also be able to use a C++ container that supports inserting/erasing elements without breaking pointers, like std::set, then using pointers to those elements to refer to other tables.
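The pointer-based option can be sketched like this, with hypothetical `Texture` and `MaterialRef` types. Note that elements of a `std::set` are immutable, so the pointers have to be `const`; a node-based container such as `std::map` or `std::list` gives the same pointer stability if you need to modify the objects.

```cpp
#include <set>
#include <string>

struct Texture
{
    std::string Filename;
    bool operator<(const Texture& other) const { return Filename < other.Filename; }
};

struct MaterialRef
{
    const Texture* Diffuse; // pointer used in place of an integer foreign key
};

// Inserts a texture and returns a pointer to the stored element. Pointers to
// std::set elements stay valid until that particular element is erased.
inline const Texture* AddTexture(std::set<Texture>& textures, const std::string& filename)
{
    return &*textures.insert(Texture{ filename }).first;
}
```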

There exists a lot of formalism about primary keys and foreign keys in the world of relational databases. It’s worth reading up on this topic if you’re interested in exploring the limits of this concept.

Indexing

It’s nice to have primary keys and all, but sometimes you also want to lookup objects based on other attributes. For example, you might want to lookup all meshes with the name “crate”. In the worst case, you can iterate through all the meshes looking for it, which is not a big deal if you do this search infrequently. However, if you do this search often, you might want to accelerate it.

In database-speak, accelerating lookups is done by building an index on a table. You can store the table itself in a way that allows efficient lookups, like sorting all meshes by their name, which would allow binary searching. Storing the table itself in a sorted order is known as building a clustered index. Naturally, you can only have one clustered index per table, since you can’t keep a table sorted by two unrelated things at the same time. By default, your tables should support efficient lookup from their primary key.
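As a sketch of the clustered index idea, the table below is a `std::vector` kept sorted by name so that lookups can use binary search. `MeshRow`, `InsertSorted`, and `FindByName` are names invented for this example.

```cpp
#include <algorithm>
#include <string>
#include <utility>
#include <vector>

struct MeshRow { std::string Name; unsigned IndexCount; };

inline bool NameLess(const MeshRow& a, const MeshRow& b) { return a.Name < b.Name; }

// Inserts while keeping the table itself sorted by name.
inline void InsertSorted(std::vector<MeshRow>& meshes, MeshRow row)
{
    auto where = std::upper_bound(meshes.begin(), meshes.end(), row, NameLess);
    meshes.insert(where, std::move(row));
}

// Binary search for the first mesh with the given name; nullptr if absent.
inline const MeshRow* FindByName(const std::vector<MeshRow>& meshes, const std::string& name)
{
    auto it = std::lower_bound(meshes.begin(), meshes.end(), name,
        [](const MeshRow& row, const std::string& key) { return row.Name < key; });
    return (it != meshes.end() && it->Name == name) ? &*it : nullptr;
}
```

Keep in mind that sorted insertion shifts rows around, so positions in such a table cannot double as stable foreign keys.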

If you want to have many indexes on a table, you can store an acceleration structure outside the table. For example, I could store a multimap alongside the table of meshes that lets me lookup mesh IDs by name in logarithmic time. The trouble is that every index needs to be kept in sync with the table, meaning that every additional non-clustered index adds further performance cost to insertions and deletions.
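Here is a minimal sketch of a non-clustered index, assuming a table keyed by integer ID with a `std::multimap` from name to ID alongside it. All names are hypothetical. Notice that both the insert and the erase function have to touch the index as well as the table, which is the synchronization cost mentioned above.

```cpp
#include <cstdint>
#include <map>
#include <string>
#include <vector>

struct MeshTable
{
    std::map<uint32_t, std::string> NameByID;       // the table: ID -> mesh name
    std::multimap<std::string, uint32_t> IDsByName; // the index: name -> IDs
    uint32_t NextID = 0;
};

inline uint32_t InsertIndexedMesh(MeshTable& table, const std::string& name)
{
    uint32_t id = table.NextID++;
    table.NameByID.emplace(id, name);
    table.IDsByName.emplace(name, id); // keep the index in sync
    return id;
}

inline void EraseIndexedMesh(MeshTable& table, uint32_t id)
{
    auto row = table.NameByID.find(id);
    if (row == table.NameByID.end()) return;
    // Remove the matching (name, id) entry from the index first.
    auto range = table.IDsByName.equal_range(row->second);
    for (auto it = range.first; it != range.second; ++it)
    {
        if (it->second == id) { table.IDsByName.erase(it); break; }
    }
    table.NameByID.erase(row);
}

// Returns the IDs of all meshes with the given name, in logarithmic time
// plus the number of matches.
inline std::vector<uint32_t> FindMeshIDs(const MeshTable& table, const std::string& name)
{
    std::vector<uint32_t> ids;
    auto range = table.IDsByName.equal_range(name);
    for (auto it = range.first; it != range.second; ++it) ids.push_back(it->second);
    return ids;
}
```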

The Code

To give you an idea of what this looks like, I’ll show you some examples of Scene setups in my projects, with varying amount of adherence to the principles I explained so far in this article.

Exhibit A: “DoF”

I did a project recently where I wanted to implement real-time depth-of-field using summed area tables. The code for scene.h is at the bottom of this section of this blog post.

If you look at the code, you’ll see I was lazy and basically just hooked everything together using packed_freelists. I also defined two utility functions for adding stuff to the Scene, namely a function to add a mesh to the scene from an obj file, and a function to add a new instance of a mesh. The functions return the primary keys of the objects they added, which lets me make further modifications to the object after adding it, like setting the transform of a mesh instance after adding it.

You can identify the foreign keys by the uint32_t “ID” variables, such as MeshID and TransformID. Beyond maybe overusing packed_freelist, the relationships between the tables are mostly obvious to navigate. You can see I was lazy and didn’t even implement removing elements, and didn’t even write a destructor that cleans up the OpenGL objects.

Not deleting OpenGL objects is something I do often out of laziness, and it barely matters because everything gets garbage collected when you destroy the OpenGL context anyways, so I would actually recommend that approach for dealing with any OpenGL resources that stay around for the lifetime of your whole program. If you want to be a stickler for correctness, I’ve had success using shared_ptr with a custom deleter, as follows:

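shared_ptr<GLuint> pBuffer(

new GLuint(0),

// Delete the GL buffer, then free the heap-allocated GLuint itself

// (a custom deleter replaces shared_ptr's default delete).

[](GLuint* p){ glDeleteBuffers(1, p); delete p; });

glGenBuffers(1, pBuffer.get());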

A small extension to this is to wrap the shared_ptr in a small class that makes get() return a GLuint, and adds a new member function “get_address_of()” that returns a GLuint*. You can then use get() for your normal OpenGL calls, and get_address_of() for functions like glGenBuffers and glDeleteBuffers. This is very similar to how ComPtr is used in D3D programs (with a very similar interface: Get() and GetAddressOf()).
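Such a wrapper might look roughly like the sketch below. The class name `GLHandle` is made up, and the `GLuint` typedef is only a stand-in so the snippet compiles without an OpenGL header; in a real program it comes from your OpenGL header. The deleter is passed in so the same class can serve buffers, textures, and so on.

```cpp
#include <memory>

typedef unsigned int GLuint; // stand-in; normally provided by your OpenGL header

class GLHandle
{
public:
    // `deleter` receives the address of the GL name when the last copy of
    // this handle goes away, e.g. [](GLuint* p) { glDeleteBuffers(1, p); }
    template<class Deleter>
    explicit GLHandle(Deleter deleter)
        : mName(new GLuint(0), [deleter](GLuint* p) { deleter(p); delete p; })
    {
    }

    // For normal OpenGL calls that take the object name by value.
    GLuint get() const { return *mName; }

    // For functions like glGenBuffers that write the name through a pointer.
    GLuint* get_address_of() { return mName.get(); }

private:
    std::shared_ptr<GLuint> mName;
};
```

With this, creating a buffer would look something like `GLHandle buffer([](GLuint* p) { glDeleteBuffers(1, p); });` followed by `glGenBuffers(1, buffer.get_address_of());` and later `glBindBuffer(GL_ARRAY_BUFFER, buffer.get());`.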

This shared_ptr thing is not the most efficient approach, but it’s straightforward standard C++, and it saves you the trouble of defining a bunch of RAII wrapper classes for OpenGL objects. I find such RAII wrapper classes are usually leaky abstractions on top of OpenGL anyways, and they also force me to figure out how somebody else’s wrapper classes work instead of being able to reuse my existing OpenGL knowledge to write plain reusable standard OpenGL code.

I should point out that since my Scene class is implemented only in terms of plain OpenGL objects, that makes it easy for anybody to write an OpenGL renderer that reads the contents of the tables and renders them in whatever way they see fit. One thing you might notice is that I also supply a ready-built Vertex Array Object with each mesh, which means that rendering a mesh is as simple as binding the VAO and calling glDrawElements. Note you don’t have to bind any vertex buffers or the index buffer, since OpenGL’s Vertex Array Object automatically keeps a reference to the vertex buffers and index buffer that were attached to it when I loaded the model (It’s true!). For a better explanation about how vertex array objects work, check out my Vertex Specification Catastrophe slides.

glBindVertexArray(mesh.MeshVAO);

glUseProgram(myProgram);

glDrawElements(GL_TRIANGLES, mesh.IndexCount, GL_UNSIGNED_INT, 0);

glUseProgram(0);

glBindVertexArray(0);

The pinch is that OpenGL’s Vertex Array Object defines a mapping between vertex buffers and vertex shader attributes. I don’t use glGetAttribLocation to get the attribute locations, since I want to separate the mesh from the shaders used to render it. Instead, I use a convention for the attribute locations, and I establish this convention using the “preamble.glsl” file, as described in my article on GLSL Shader Live-Reloading, which allows me to establish an automatic convention for attribute locations between my C++ code and all my GLSL shaders.
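One way such a convention could be set up is sketched below. This is not necessarily how my preamble.glsl works: the constant names, the macro names, and the `MakeAttribPreamble` function are all assumptions for illustration. The idea is that the C++ side owns the location numbers and generates `#define` lines that get prepended to every shader, so a vertex shader can write something like `layout(location = POSITION_ATTRIB_LOCATION) in vec3 Position;`.

```cpp
#include <string>

// Attribute locations shared by the mesh loader and every shader.
// The numbers match the mesh loading example: 0 = position,
// 1 = texture coordinates, 2 = normals.
const int kPositionAttribLocation = 0;
const int kTexCoordAttribLocation = 1;
const int kNormalAttribLocation   = 2;

// Builds GLSL text to insert near the top of every shader source
// (after its #version line).
inline std::string MakeAttribPreamble()
{
    return
        "#define POSITION_ATTRIB_LOCATION " + std::to_string(kPositionAttribLocation) + "\n"
        "#define TEXCOORD_ATTRIB_LOCATION " + std::to_string(kTexCoordAttribLocation) + "\n"
        "#define NORMAL_ATTRIB_LOCATION " + std::to_string(kNormalAttribLocation) + "\n";
}
```

The mesh loader then passes the same constants to glVertexAttribPointer and glEnableVertexAttribArray, so the VAO and the shaders agree without ever querying a linked program.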

You can find this source file (and the rest of the project it comes from) here: https://github.com/nlguillemot/dof/blob/master/viewer/scene.h

#pragma once

#include "opengl.h"

#include "packed_freelist.h"

#include <glm/glm.hpp>

#include <glm/gtc/quaternion.hpp>

#include <vector>

#include <map>

#include <string>

struct DiffuseMap

{

GLuint DiffuseMapTO;

};

struct Material

{

std::string Name;

float Ambient[3];

float Diffuse[3];

float Specular[3];

float Shininess;

uint32_t DiffuseMapID;

};

struct Mesh

{

std::string Name;

GLuint MeshVAO;

GLuint PositionBO;

GLuint TexCoordBO;

GLuint NormalBO;

GLuint IndexBO;

GLuint IndexCount;

GLuint VertexCount;

std::vector<GLDrawElementsIndirectCommand> DrawCommands;

std::vector<uint32_t> MaterialIDs;

};

struct Transform

{

glm::vec3 Scale;

glm::vec3 RotationOrigin;

glm::quat Rotation;

glm::vec3 Translation;

};

struct Instance

{

uint32_t MeshID;

uint32_t TransformID;

};

struct Camera

{

// View

glm::vec3 Eye;

glm::vec3 Target;

glm::vec3 Up;

// Projection

float FovY;

float Aspect;

float ZNear;

};

class Scene

{

public:

packed_freelist<DiffuseMap> DiffuseMaps;

packed_freelist<Material> Materials;

packed_freelist<Mesh> Meshes;

packed_freelist<Transform> Transforms;

packed_freelist<Instance> Instances;

packed_freelist<Camera> Cameras;

uint32_t MainCameraID;

// allocates memory for the packed_freelists,

// since my packed_freelist doesn't have auto-resizing yet.

void Init();

};

void LoadMeshes(

Scene& scene,

const std::string& filename,

std::vector<uint32_t>* loadedMeshIDs);

void AddInstance(

Scene& scene,

uint32_t meshID,

uint32_t* newInstanceID);

As an isolated example of how to load a mesh (using tinyobjloader), I’ll leave you with some example code. I hope this helps you understand how to use the Vertex Array Object API, which is tragically poorly designed.

// Load the mesh and its materials

std::vector<tinyobj::shape_t> shapes;

std::vector<tinyobj::material_t> materials;

std::string err;

if (!tinyobj::LoadObj(shapes, materials, err,

"models/cube/cube.obj", "models/cube/"))

{

fprintf(stderr, "Failed to load cube.obj: %s\n", err.c_str());

return 1;

}

GLuint positionVBO = 0;

GLuint texcoordVBO = 0;

GLuint normalVBO = 0;

GLuint indicesEBO = 0;

// Upload per-vertex positions

if (!shapes[0].mesh.positions.empty())

{

glGenBuffers(1, &positionVBO);

glBindBuffer(GL_ARRAY_BUFFER, positionVBO);

glBufferData(GL_ARRAY_BUFFER,

shapes[0].mesh.positions.size() * sizeof(float),

shapes[0].mesh.positions.data(), GL_STATIC_DRAW);

glBindBuffer(GL_ARRAY_BUFFER, 0);

}

// Upload per-vertex texture coordinates

if (!shapes[0].mesh.texcoords.empty())

{

glGenBuffers(1, &texcoordVBO);

glBindBuffer(GL_ARRAY_BUFFER, texcoordVBO);

glBufferData(GL_ARRAY_BUFFER,

shapes[0].mesh.texcoords.size() * sizeof(float),

shapes[0].mesh.texcoords.data(), GL_STATIC_DRAW);

glBindBuffer(GL_ARRAY_BUFFER, 0);

}

// Upload per-vertex normals

if (!shapes[0].mesh.normals.empty())

{

glGenBuffers(1, &normalVBO);

glBindBuffer(GL_ARRAY_BUFFER, normalVBO);

glBufferData(GL_ARRAY_BUFFER,

shapes[0].mesh.normals.size() * sizeof(float),

shapes[0].mesh.normals.data(), GL_STATIC_DRAW);

glBindBuffer(GL_ARRAY_BUFFER, 0);

}

// Upload the indices that form triangles

if (!shapes[0].mesh.indices.empty())

{

glGenBuffers(1, &indicesEBO);

glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, indicesEBO);

glBufferData(GL_ELEMENT_ARRAY_BUFFER,

shapes[0].mesh.indices.size() * sizeof(unsigned int),

shapes[0].mesh.indices.data(), GL_STATIC_DRAW);

glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, 0);

}

// Hook up vertex/index buffers to a "vertex array object" (VAO)

// VAOs are the closest thing OpenGL has to a "mesh" object.

// VAOs feed data from buffers to the inputs of a vertex shader.

GLuint meshVAO;

glGenVertexArrays(1, &meshVAO);

// Attach position buffer as attribute 0

if (positionVBO != 0)

{

glBindVertexArray(meshVAO);

// Note: glVertexAttribPointer sets the current

// GL_ARRAY_BUFFER_BINDING as the source of data

// for this attribute.

// That's why we bind a GL_ARRAY_BUFFER before

// calling glVertexAttribPointer then

// unbind right after (to clean things up).

glBindBuffer(GL_ARRAY_BUFFER, positionVBO);

glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE,

sizeof(float) * 3, 0);

glBindBuffer(GL_ARRAY_BUFFER, 0);

// Enable the attribute (they are disabled by default

// -- this is very easy to forget!!)

glEnableVertexAttribArray(0);

glBindVertexArray(0);

}

// Attach texcoord buffer as attribute 1

if (texcoordVBO != 0)

{

glBindVertexArray(meshVAO);

// Note: glVertexAttribPointer sets the current

// GL_ARRAY_BUFFER_BINDING as the source of data

// for this attribute.

// That's why we bind a GL_ARRAY_BUFFER before

// calling glVertexAttribPointer then

// unbind right after (to clean things up).

glBindBuffer(GL_ARRAY_BUFFER, texcoordVBO);

glVertexAttribPointer(1, 2, GL_FLOAT, GL_FALSE,

sizeof(float) * 2, 0);

glBindBuffer(GL_ARRAY_BUFFER, 0);

// Enable the attribute (they are disabled by default

// -- this is very easy to forget!!)

glEnableVertexAttribArray(1);

glBindVertexArray(0);

}

// Attach normal buffer as attribute 2

if (normalVBO != 0)

{

glBindVertexArray(meshVAO);

// Note: glVertexAttribPointer sets the current

// GL_ARRAY_BUFFER_BINDING as the source of data

// for this attribute.

// That's why we bind a GL_ARRAY_BUFFER before

// calling glVertexAttribPointer then

// unbind right after (to clean things up).

glBindBuffer(GL_ARRAY_BUFFER, normalVBO);

glVertexAttribPointer(2, 3, GL_FLOAT, GL_FALSE,

sizeof(float) * 3, 0);

glBindBuffer(GL_ARRAY_BUFFER, 0);

// Enable the attribute (they are disabled by default

// -- this is very easy to forget!!)

glEnableVertexAttribArray(2);

glBindVertexArray(0);

}

if (indicesEBO != 0)

{

glBindVertexArray(meshVAO);

// Note: Calling glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, ebo);

// when a VAO is bound attaches the index buffer to the VAO.

// From an API design perspective, this is subtle.

glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, indicesEBO);

glBindVertexArray(0);

}

// Can now bind the vertex array object to

// the graphics pipeline, to render with it.

// For example:

glBindVertexArray(meshVAO);

// (Draw stuff using vertex array object)

// And when done, unbind it from the graphics pipeline:

glBindVertexArray(0);

Exhibit B: “fictional-doodle”

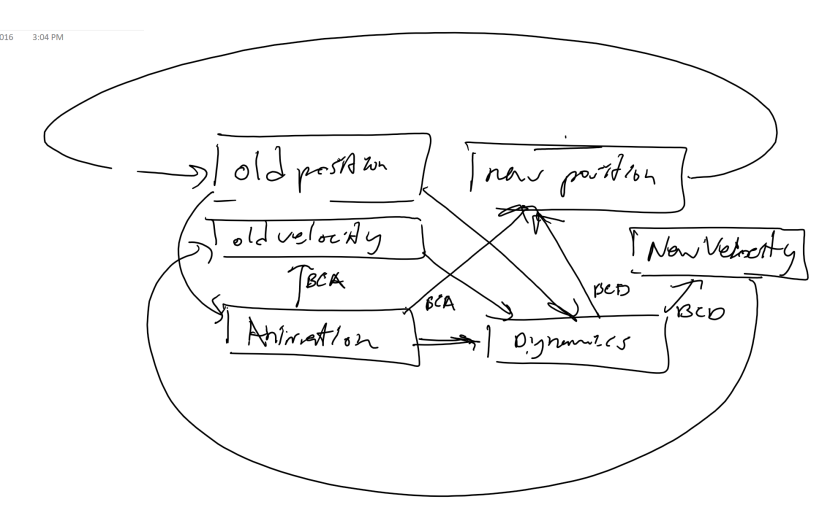

This was a course project I did with my friend Leon Senft. We wanted to load a 3D mesh and be able to animate it in two modes: skeletal animation, and ragdoll physics. This project is way bigger than the DoF project, and it was also a much earlier project before I had the proper separation of MVVM in mind. Still, from a coding perspective, the project went very smoothly. Leon and I designed the schema together, and once we figured out how we were going to interface between the animation and the ragdoll physics systems, we were able to easily work on the project in parallel.

To decide how the two animation systems should communicate, we sketched a feedback loop flowchart that explained the order in which the two systems read and wrote their data at every frame, which mapped in a straightforward way to our code since the flowchart’s reads and writes corresponded to reads and writes of our scene database’s data. Below is the flowchart copied verbatim from the OneNote document we scratched it up in.

As you can see in the code at the bottom of this section, this schema has a lot more tables. You can also see some non-clustered indexes used to lookup textures by filename, using unordered_map. We used std::vector for most of the tables, mainly because I hadn’t developed the packed_freelist class yet, and because random insertion/deletion wasn’t a requirement we had anyways.

Again, this project worked with OpenGL in terms of plain OpenGL GLuints, and we didn’t go through the trouble of arbitrarily abstracting all that stuff. This project also used shader live-reloading, but it was through a method less polished than my newer ShaderSet design. Leon was working on a Mac, so we had to deal with OpenGL 4.1, which meant we had to use glGetUniformLocation because GL 4.1 doesn’t allow shader-specified uniform locations. This made live-reloading and shader input location conventions a lot more tedious than with newer GL versions.

Speaking of Mac support, it’s really painful that Mac’s GL doesn’t support GL_ARB_debug_output (whose successor, KHR_debug, is core in GL 4.3), which lets you register a callback that is invoked automatically whenever a GL error happens. Instead, you have to call glGetError() after every API call. To work around this, we wrote our own OpenGL header that wraps each OpenGL call we used inside a class that defines operator() and automatically calls glGetError() after every call. You can see our implementation in the project’s repository (linked below). It’s a bit disgusting, but it worked out well for us. I think it could be improved by wrapping the function pointers at the place where SDL_GL_GetProcAddress is called, which would make the interface more transparent.
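To illustrate the general shape of such a wrapper, here is a simplified sketch. It is not the code from our project: `CheckedCall` is a hypothetical name, the error query is passed in as a plain function pointer (in a real program it would be glGetError), and platform calling conventions on the function pointers are ignored.

```cpp
#include <cstdio>

template<class Signature>
class CheckedCall;

// Wraps a function pointer and queries the error state after every call.
template<class R, class... Args>
class CheckedCall<R(Args...)>
{
public:
    CheckedCall(const char* name, R (*func)(Args...), unsigned int (*getError)())
        : mName(name), mFunc(func), mGetError(getError)
    {
    }

    R operator()(Args... args) const
    {
        // The guard's destructor runs after the wrapped call has returned,
        // which also works when R is void.
        struct ErrorCheck
        {
            const CheckedCall& Call;
            ~ErrorCheck()
            {
                unsigned int error = Call.mGetError();
                if (error != 0)
                {
                    std::fprintf(stderr, "%s failed with error 0x%x\n", Call.mName, error);
                }
            }
        } check{ *this };
        return mFunc(args...);
    }

private:
    const char* mName;
    R (*mFunc)(Args...);
    unsigned int (*mGetError)();
};
```

A header built this way would declare one such object per OpenGL entry point, so that calling it looks the same as calling the raw function.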

You can find the source file below (and the rest of the project it comes from) here: https://github.com/nlguillemot/fictional-doodle/blob/master/scene.h

#pragma once

#include "opengl.h"

#include "shaderreloader.h"

#include "dynamics.h"

#include "profiler.h"

#include <glm/glm.hpp>

#include <glm/gtc/type_precision.hpp>

#include <glm/gtx/dual_quaternion.hpp>

#include <string>

#include <vector>

#include <unordered_map>

struct SDL_Window;

struct PositionVertex

{

glm::vec3 Position;

};

struct TexCoordVertex

{

glm::vec2 TexCoord;

};

struct DifferentialVertex

{

glm::vec3 Normal;

glm::vec3 Tangent;

glm::vec3 Bitangent;

};

struct BoneWeightVertex

{

glm::u8vec4 BoneIDs;

glm::vec4 Weights;

};

// Each bone is either controlled by skinned animation or the physics simulation (ragdoll)

enum BoneControlMode

{

BONECONTROL_ANIMATION,

BONECONTROL_DYNAMICS

};

// Different types of scene nodes need to be rendered slightly differently.

enum SceneNodeType

{

SCENENODETYPE_TRANSFORM,

SCENENODETYPE_STATICMESH,

SCENENODETYPE_SKINNEDMESH

};

struct SQT

{

// No S, lol. md5 doesn't use scale.

glm::vec3 T;

glm::quat Q;

};

// Used for array indices, don't change!

enum SkinningMethod

{

SKINNING_DLB, // Dual quaternion linear blending

SKINNING_LBS // Linear blend skinning

};

// Bitsets to say which components of the animation change every frame

// For example if an object does not rotate then the Q (Quaternion) channels are turned off, which saves space.

// When a channel is not set in an animation sequence, then the baseFrame setting is used instead.

enum AnimChannel

{

ANIMCHANNEL_TX_BIT = 1, // X component of translation

ANIMCHANNEL_TY_BIT = 2, // Y component of translation

ANIMCHANNEL_TZ_BIT = 4, // Z component of translation

ANIMCHANNEL_QX_BIT = 8, // X component of quaternion

ANIMCHANNEL_QY_BIT = 16, // Y component of quaternion

ANIMCHANNEL_QZ_BIT = 32 // Z component of quaternion

// There is no quaternion W, since the quaternions are normalized so it can be deduced from the x/y/z.

};

// StaticMesh Table

// All unique static meshes.

struct StaticMesh

{

GLuint MeshVAO; // vertex array for drawing the mesh

GLuint PositionVBO; // position only buffer

GLuint TexCoordVBO; // texture coordinates buffer

GLuint DifferentialVBO; // differential geometry buffer (t,n,b)

GLuint MeshEBO; // Index buffer for the mesh

int NumIndices; // Number of indices in the static mesh

int NumVertices; // Number of vertices in the static mesh

int MaterialID; // The material this mesh was designed for

};

// Skeleton Table

// All unique static skeleton definitions.

struct Skeleton

{

GLuint BoneEBO; // Indices of bones used for rendering the skeleton

glm::mat4 Transform; // Global skeleton transformation to correct bind pose orientation

std::vector<std::string> BoneNames; // Name of each bone

std::unordered_map<std::string, int> BoneNameToID; // Bone ID lookup from name

std::vector<glm::mat4> BoneInverseBindPoseTransforms; // Transforms a vertex from model space to bone space

std::vector<int> BoneParents; // Bone parent index, or -1 if root

std::vector<float> BoneLengths; // Length of each bone

int NumBones; // Number of bones in the skeleton

int NumBoneIndices; // Number of indices for rendering the skeleton as a line mesh

};

// BindPoseMesh Table

// All unique static bind pose meshes.

// Each bind pose mesh is compatible with one skeleton.

struct BindPoseMesh

{

GLuint SkinningVAO; // Vertex arrays for input to skinning transform feedback

GLuint PositionVBO; // vertices of meshes in bind pose

GLuint TexCoordVBO; // texture coordinates of mesh

GLuint DifferentialVBO; // differential geometry of bind pose mesh (n,t,b)

GLuint BoneVBO; // bone IDs and bone weights of mesh

GLuint EBO; // indices of mesh in bind pose

int NumIndices; // Number of indices in the bind pose

int NumVertices; // Number of vertices in the bind pose

int SkeletonID; // Skeleton used to skin this mesh

int MaterialID; // The material this mesh was designed for

};

// AnimSequence Table

// All unique static animation sequences.

// Each animation sequence is compatible with one skeleton.

struct AnimSequence

{

std::string Name; // The human readable name of each animation sequence

std::vector<SQT> BoneBaseFrame; // The base frame for each bone, which defines the initial transform.

std::vector<uint8_t> BoneChannelBits; // Which animation channels are present in frame data for each bone

std::vector<int> BoneFrameDataOffsets; // The offset in floats in the frame data for this bone

std::vector<float> BoneFrameData; // All frame data for each bone allocated according to the channel bits.

int NumFrames; // The number of key frames in this animation sequence

int NumFrameComponents; // The number of floats per frame.

int SkeletonID; // The skeleton that this animation sequence animates

int FramesPerSecond; // Frames per second for each animation sequence

};

// AnimatedSkeleton Table

// Each animated skeleton instance is associated to an animation sequence, which is associated to one skeleton.

struct AnimatedSkeleton

{

GLuint BoneTransformTBO; // The matrices used to transform the bones

GLuint BoneTransformTO; // Texture descriptor for the palette

GLuint SkeletonVAO; // Vertex array for rendering animated skeletons

GLuint SkeletonVBO; // Vertex buffer object for the skeleton vertices

int CurrAnimSequenceID; // The currently playing animation sequence for each skinned mesh

int CurrTimeMillisecond; // The current time in the current animation sequence in milliseconds

float TimeMultiplier; // Controls the speed of animation

bool InterpolateFrames; // If true, interpolate animation frames

std::vector<glm::dualquat> BoneTransformDualQuats; // Skinning palette for DLB

std::vector<glm::mat3x4> BoneTransformMatrices; // Skinning palette for LBS

std::vector<BoneControlMode> BoneControls; // How each bone is animated

// Joint physical properties

std::vector<glm::vec3> JointPositions;

std::vector<glm::vec3> JointVelocities;

};

// SkinnedMesh Table

// All instances of skinned meshes in the scene.

// Each skinned mesh instance is associated to one bind pose, which is associated to one skeleton.

// The skeleton of the bind pose and the skeleton of the animated skeleton must be the same one.

struct SkinnedMesh

{

GLuint SkinningTFO; // Transform feedback for skinning

GLuint SkinnedVAO; // Vertex array for rendering skinned meshes.

GLuint PositionTFBO; // Positions created from transform feedback

GLuint DifferentialTFBO; // Differential geometry from transform feedback

int BindPoseMeshID; // The ID of the bind pose of this skinned mesh

int AnimatedSkeletonID; // The animated skeleton used to transform this mesh

};

// Ragdoll Table

// All instances of ragdoll simulations in the scene.

// Each ragdoll simulation is associated to one animated skeleton.

struct Ragdoll

{

int AnimatedSkeletonID; // The animated skeleton that is being animated physically

std::vector<Constraint> BoneConstraints; // all constraints in the simulation used to stop the skeleton from separating

std::vector<glm::ivec2> BoneConstraintParticleIDs; // particle IDs used in the bone distance constraints

std::vector<glm::ivec3> JointConstraintParticleIDs; // particles IDs used in the joint angular constraints

std::vector<Hull> JointHulls; // the collision hulls associated to every joint

};

// DiffuseTexture Table

struct DiffuseTexture

{

bool HasTransparency; // If the alpha of this texture has some values < 1.0

GLuint TO; // Texture object

};

// SpecularTexture Table

struct SpecularTexture

{

GLuint TO; // Texture object

};

// NormalTexture Table

struct NormalTexture

{

GLuint TO; // Texture object

};

// Material Table

// Each material is associated to DiffuseTextures (or -1 if not present)

// Each material is associated to SpecularTextures (or -1 if not present)

// Each material is associated to NormalTextures (or -1 if not present)

struct Material

{

std::vector<int> DiffuseTextureIDs; // Diffuse textures (if present)

std::vector<int> SpecularTextureIDs; // Specular textures (if present)

std::vector<int> NormalTextureIDs; // Normal textures (if present)

};

struct TransformSceneNode

{

// Empty node with no purpose other than making nodes relative to it

};

struct StaticMeshSceneNode

{

int StaticMeshID; // The static mesh to render

};

struct SkinnedMeshSceneNode

{

int SkinnedMeshID; // The skinned mesh to render

};

// SceneNode Table

struct SceneNode

{

glm::mat4 LocalTransform; // Transform relative to parent (or relative to world if no parent exists)

glm::mat4 WorldTransform; // Transform relative to world (updated from LocalTransform)

SceneNodeType Type; // What type of node this is.

int TransformParentNodeID; // The node this node is placed relative to, or -1 if none

union

{

TransformSceneNode AsTransform;

StaticMeshSceneNode AsStaticMesh;

SkinnedMeshSceneNode AsSkinnedMesh;

};

};

struct Scene

{

std::vector<StaticMesh> StaticMeshes;

std::vector<Skeleton> Skeletons;

std::vector<BindPoseMesh> BindPoseMeshes;

std::vector<AnimSequence> AnimSequences;

std::vector<AnimatedSkeleton> AnimatedSkeletons;

std::vector<SkinnedMesh> SkinnedMeshes;

std::vector<Ragdoll> Ragdolls;

std::vector<DiffuseTexture> DiffuseTextures;

std::unordered_map<std::string, int> DiffuseTextureNameToID;

std::vector<SpecularTexture> SpecularTextures;

std::unordered_map<std::string, int> SpecularTextureNameToID;

std::vector<NormalTexture> NormalTextures;

std::unordered_map<std::string, int> NormalTextureNameToID;

std::vector<Material> Materials;

std::vector<SceneNode> SceneNodes;

// Skinning shader programs that output skinned vertices using transform feedback.

std::vector<const char*> SkinningOutputs;

ReloadableShader SkinningDLB{ "skinning_dlb.vert" };

ReloadableShader SkinningLBS{ "skinning_lbs.vert" };

ReloadableProgram SkinningSPs[2];

GLint SkinningSP_BoneTransformsLoc;

// Scene shader. Used to render objects in the scene which have their geometry defined in world space.

ReloadableShader SceneVS{ "scene.vert" };

ReloadableShader SceneFS{ "scene.frag" };

ReloadableProgram SceneSP{ &SceneVS, &SceneFS };

GLint SceneSP_ModelWorldLoc;

GLint SceneSP_WorldModelLoc;

GLint SceneSP_ModelViewLoc;

GLint SceneSP_ModelViewProjectionLoc;

GLint SceneSP_WorldViewLoc;

GLint SceneSP_CameraPositionLoc;

GLint SceneSP_LightPositionLoc;

GLint SceneSP_WorldLightProjectionLoc;

GLint SceneSP_DiffuseTextureLoc;

GLint SceneSP_SpecularTextureLoc;

GLint SceneSP_NormalTextureLoc;

GLint SceneSP_ShadowMapTextureLoc;

GLint SceneSP_IlluminationModelLoc;

GLint SceneSP_HasNormalMapLoc;

GLint SceneSP_BackgroundColorLoc;

// Skeleton shader program used to render bones.

ReloadableShader SkeletonVS{ "skeleton.vert" };

ReloadableShader SkeletonFS{ "skeleton.frag" };

ReloadableProgram SkeletonSP{ &SkeletonVS, &SkeletonFS };

GLint SkeletonSP_ColorLoc;

GLint SkeletonSP_ModelViewProjectionLoc;

// Shadow shader program to render vertices into the shadow map

ReloadableShader ShadowVS{ "shadow.vert" };

ReloadableShader ShadowFS{ "shadow.frag" };

ReloadableProgram ShadowSP{ &ShadowVS, &ShadowFS };

GLint ShadowSP_ModelLightProjectionLoc;

// true if all shaders in the scene are compiling/linking successfully.

// Scene updates will stop if not all shaders are working, since it will likely crash.

bool AllShadersOK;

// Camera placement, updated each frame.

glm::vec3 CameraPosition;

glm::vec4 CameraQuaternion;

glm::mat3 CameraRotation; // updated from quaternion every frame

glm::vec3 BackgroundColor;

// The camera only reads user input when it is enabled.

// Needed to implement menu navigation without the camera moving due to mouse/keyboard action.

bool EnableCamera;

// The current skinning method used to skin all meshes in the scene

SkinningMethod MeshSkinningMethod;

// For debugging skeletal animations

bool ShowBindPoses;

bool ShowSkeletons;

// Placing the hellknight (for testing)

int HellknightTransformNodeID;

glm::vec3 HellknightPosition;

bool IsPlaying;

bool ShouldStep;

// Damping coefficient for ragdolls

// 1.0 = rigid body

float RagdollBoneStiffness;

float RagdollJointStiffness;

float RagdollDampingK;

float Gravity;

glm::vec3 LightPosition;

Profiler Profiling;

// Exponential weighted moving averages for profiling statistics

std::unordered_map<std::string,float> ProfilingEMAs;

};

void InitScene(Scene* scene);

void UpdateScene(Scene* scene, SDL_Window* window, uint32_t deltaMilliseconds);

Renderer

The whole point of the Scene was to store the data of the scene you want to render, without having to specify exactly how it should be rendered. This gives you the freedom of being able to implement different renderers for the same scene data, which is useful for when you’re making a bunch of different OpenGL projects. You can easily implement a new rendering technique without even having to touch the scene loading code, which is nice.

My implementation of Renderer usually has three member functions: Init(), Resize(width, height), and Render(Scene*). Init() does the one-time setup of renderer data, Resize() deletes and recreates all textures that need to be sized according to the screen, and Render() traverses the Scene and renders the data inside it.

I usually begin the program by calling Init() followed by an initial Resize(), which creates all the screen-sized textures for the first time. The code inside Resize() deletes and recreates all textures when the window gets resized, and since the glDelete* calls silently ignore the name 0, it’s safe to call Resize() right after Init(), before any of those textures exist. After that, Render() is called every frame to render the scene. Before calling Render(), I might also call an Update() method that does any necessary simulation work, for example running some game logic. You can also be lazy and write that simulation update code in Render(), whatever makes sense.

Your renderer probably has to maintain some data that is used to implement whatever specific rendering algorithm you have in mind. This data is what constitutes the viewmodel. I usually don’t separate the viewmodel into its own class, but I guess you could if you somehow find that useful. The “view” itself is basically the Render() function, which uses the model and viewmodel to produce a frame of video.
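The lifecycle described above can be sketched roughly as follows. The OpenGL calls are replaced by comments so that the sketch compiles without a GL context, and the member names (mInitialized, mResizeCount, mFrameCount), the stand-in Scene struct, and the RunFrames helper are all made up for illustration rather than taken from my projects.

```cpp
#include <cassert>
#include <cstdint>

// Stand-in for the real Scene (the model). Only here so the sketch compiles.
struct Scene
{
    uint32_t MainCameraID = 0;
};

class Renderer
{
public:
    // One-time setup: compile shaders, create resources that don't depend on window size.
    void Init()
    {
        mInitialized = true;
    }

    // Called once right after Init() and again on every window resize.
    // Real code would delete and recreate the screen-sized textures and FBOs here.
    void Resize(int width, int height)
    {
        mBackbufferWidth = width;
        mBackbufferHeight = height;
        mResizeCount++;
    }

    // The "view": reads the model (Scene) and the viewmodel (members below) to draw a frame.
    void Render(const Scene* scene)
    {
        assert(mInitialized && "call Init() before Render()");
        (void)scene; // real code would traverse the scene's tables here
        mFrameCount++;
    }

    // Viewmodel: data that exists only to serve the rendering algorithm.
    bool mInitialized = false;
    int mBackbufferWidth = 0;
    int mBackbufferHeight = 0;
    int mResizeCount = 0;
    uint64_t mFrameCount = 0;
};

// Typical call order: Init(), an initial Resize(), then one Render() per frame.
inline void RunFrames(Renderer& renderer, const Scene& scene, int width, int height, int frames)
{
    renderer.Init();
    renderer.Resize(width, height);
    for (int i = 0; i < frames; i++)
    {
        renderer.Render(&scene);
    }
}
```

In a real program the loop in RunFrames would be the window's event loop, and Resize() would also be called from the window-resize event.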

Below is part of the Resize() function from the “DoF” project’s renderer (which you can see here: https://github.com/nlguillemot/dof/blob/master/viewer/renderer.cpp). It deletes and recreates the color and depth textures that need to be sized according to the window, then recreates the FBO used to render with them. As you can see, I’m still sticking to my guns about writing plain standard OpenGL code.

glDeleteTextures(1, &mBackbufferColorTOSS);

glGenTextures(1, &mBackbufferColorTOSS);

glBindTexture(GL_TEXTURE_2D, mBackbufferColorTOSS);

glTexStorage2D(GL_TEXTURE_2D, 1, GL_SRGB8_ALPHA8, mBackbufferWidth, mBackbufferHeight);

glBindTexture(GL_TEXTURE_2D, 0);

glDeleteTextures(1, &mBackbufferDepthTOSS);

glGenTextures(1, &mBackbufferDepthTOSS);

glBindTexture(GL_TEXTURE_2D, mBackbufferDepthTOSS);

glTexStorage2D(GL_TEXTURE_2D, 1, GL_DEPTH_COMPONENT32F, mBackbufferWidth, mBackbufferHeight);

glBindTexture(GL_TEXTURE_2D, 0);

glDeleteFramebuffers(1, &mBackbufferFBOSS);

glGenFramebuffers(1, &mBackbufferFBOSS);

glBindFramebuffer(GL_FRAMEBUFFER, mBackbufferFBOSS);

glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_2D, mBackbufferColorTOSS, 0);

glFramebufferTexture2D(GL_FRAMEBUFFER, GL_DEPTH_ATTACHMENT, GL_TEXTURE_2D, mBackbufferDepthTOSS, 0);

GLenum fboStatus = glCheckFramebufferStatus(GL_FRAMEBUFFER);

if (fboStatus != GL_FRAMEBUFFER_COMPLETE) {

fprintf(stderr, "glCheckFramebufferStatus: %x\n", fboStatus);

}

glBindFramebuffer(GL_FRAMEBUFFER, 0);

The code I write inside the Scene loading and inside Init() and Resize() tries to be careful about binding. I always set my bindings back to zero after I’m done working with an object, since I don’t want to leak any state. This is especially important for stuff like Vertex Array Objects, which interact in highly non-obvious ways with the buffer bindings (see the mesh loading example code earlier in this article).

When writing code in the Render() function, keeping your state clean becomes more difficult, since you probably are going to set a bunch of OpenGL pipeline state to get the effects you want. One approach to this problem is to glGet the old state, set your state, do your stuff, then set the old state back. This is maybe a best-effort approach for OpenGL middleware, but I really hate having to type all the glGet stuff. For this reason, I generally write code that assumes the pipeline is in its default state, and I make sure to reset the pipeline to its default state at the end of my work. This leaves me with no surprises. Notice that this is yet another place where I try to express everything in terms of plain standard OpenGL code. That makes it easy to see which pipeline state is set where, so you can simply read a block of code and check that all of its state is set and reset properly.

As an example of Render(), consider the following bit of code that renders all the models in the scene:

glBindFramebuffer(GL_FRAMEBUFFER, mBackbufferFBOMS);

glViewport(0, 0, mBackbufferWidth, mBackbufferHeight);

glClearColor(100.0f / 255.0f, 149.0f / 255.0f, 237.0f / 255.0f, 1.0f);

glClearDepth(0.0f);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

Camera& mainCamera = mScene->Cameras[mScene->MainCameraID];

glm::vec3 eye = mainCamera.Eye;

glm::vec3 up = mainCamera.Up;

glm::mat4 V = glm::lookAt(eye, mainCamera.Target, up);

glm::mat4 P;

{

float f = 1.0f / tanf(mainCamera.FovY / 2.0f);

P = glm::mat4(

f / mainCamera.Aspect, 0.0f, 0.0f, 0.0f,

0.0f, f, 0.0f, 0.0f,

0.0f, 0.0f, 0.0f, -1.0f,

0.0f, 0.0f, mainCamera.ZNear, 0.0f);

}

glm::mat4 VP = P * V;

glUseProgram(*mSceneSP);

glUniform3fv(SCENE_CAMERAPOS_UNIFORM_LOCATION, 1, value_ptr(eye));

glEnable(GL_DEPTH_TEST);

glDepthFunc(GL_GREATER);

glEnable(GL_FRAMEBUFFER_SRGB);

for (uint32_t instanceID : mScene->Instances)

{

const Instance* instance = &mScene->Instances[instanceID];

const Mesh* mesh = &mScene->Meshes[instance->MeshID];

const Transform* transform = &mScene->Transforms[instance->TransformID];

glm::mat4 MW(1.0f); // identity (default construction leaves it uninitialized in GLM 0.9.9+)

MW = translate(-transform->RotationOrigin) * MW;

MW = mat4_cast(transform->Rotation) * MW;

MW = translate(transform->RotationOrigin) * MW;

MW = scale(transform->Scale) * MW;

MW = translate(transform->Translation) * MW;

glm::mat3 N_MW(1.0f); // identity (default construction leaves it uninitialized in GLM 0.9.9+)

N_MW = mat3_cast(transform->Rotation) * N_MW;

N_MW = glm::mat3(scale(1.0f / transform->Scale)) * N_MW;

glm::mat4 MVP = VP * MW;

glUniformMatrix4fv(SCENE_MW_UNIFORM_LOCATION, 1, GL_FALSE, value_ptr(MW));

glUniformMatrix3fv(SCENE_N_MW_UNIFORM_LOCATION, 1, GL_FALSE, value_ptr(N_MW));

glUniformMatrix4fv(SCENE_MVP_UNIFORM_LOCATION, 1, GL_FALSE, value_ptr(MVP));

glBindVertexArray(mesh->MeshVAO);

for (size_t meshDrawIdx = 0; meshDrawIdx < mesh->DrawCommands.size(); meshDrawIdx++)

{

const GLDrawElementsIndirectCommand* drawCmd = &mesh->DrawCommands[meshDrawIdx];

const Material* material = &mScene->Materials[mesh->MaterialIDs[meshDrawIdx]];

glActiveTexture(GL_TEXTURE0 + SCENE_DIFFUSE_MAP_TEXTURE_BINDING);

if (material->DiffuseMapID == -1)

{

glBindTexture(GL_TEXTURE_2D, 0);

glUniform1i(SCENE_HAS_DIFFUSE_MAP_UNIFORM_LOCATION, 0);

}

else

{

const DiffuseMap* diffuseMap = &mScene->DiffuseMaps[material->DiffuseMapID];

glBindTexture(GL_TEXTURE_2D, diffuseMap->DiffuseMapTO);

glUniform1i(SCENE_HAS_DIFFUSE_MAP_UNIFORM_LOCATION, 1);

}

glUniform3fv(SCENE_AMBIENT_UNIFORM_LOCATION, 1, material->Ambient);

glUniform3fv(SCENE_DIFFUSE_UNIFORM_LOCATION, 1, material->Diffuse);

glUniform3fv(SCENE_SPECULAR_UNIFORM_LOCATION, 1, material->Specular);

glUniform1f(SCENE_SHININESS_UNIFORM_LOCATION, material->Shininess);

glDrawElementsInstancedBaseVertexBaseInstance(

GL_TRIANGLES,

drawCmd->count,

GL_UNSIGNED_INT, (GLvoid*)(sizeof(uint32_t) * drawCmd->firstIndex),

drawCmd->primCount,

drawCmd->baseVertex,

drawCmd->baseInstance);

}

glBindVertexArray(0);

}

glBindTextures(0, kMaxTextureCount, NULL);

glDisable(GL_FRAMEBUFFER_SRGB);

glDepthFunc(GL_LESS);

glDisable(GL_DEPTH_TEST);

glUseProgram(0);

glBindFramebuffer(GL_FRAMEBUFFER, 0);

As you can see, I set the state at the start of this rendering pass, then reset it back to its default at the end. Since it’s just one block of code, I can easily double-check the code to make sure that there’s an associated reset for every set. There are also some shortcuts: for example, “glBindTextures(0, 32, NULL);” unbinds the textures on the first 32 texture units in one call (this function requires OpenGL 4.4 or ARB_multi_bind).

There are still some parts of OpenGL state which I don’t bother to reset. First, I don’t reset the viewport, since the viewport’s default value is mostly arbitrary anyways (the size of the window). Instead, I adopt the convention that one should always set the viewport immediately after setting a framebuffer. I’ve seen too many bugs of people forgetting to set the viewport before rendering anyways, so I’d rather make it a coding convention not to assume what the viewport is. There is no default viewport in D3D12 anyways, so this convention makes sense moving forward. Another example is the clear color and clear depth, which I always set to the desired values before calling glClear (again, you have to do this in newer APIs too, including GL 4.5’s DSA).

Another example of state I don’t bother to reset is the uniforms I set on my programs. OpenGL does remember what uniforms have been set to a program, but I choose to have the convention that one should not rely on this caching. Instead, you should by convention set every uniform a program has between calling glUseProgram() and glDraw*(). Anyways, D3D12 also forces you to re-upload all your root parameters (uniforms) every time you switch root signature (the program’s interface for bindings), so this convention also makes sense moving forward.

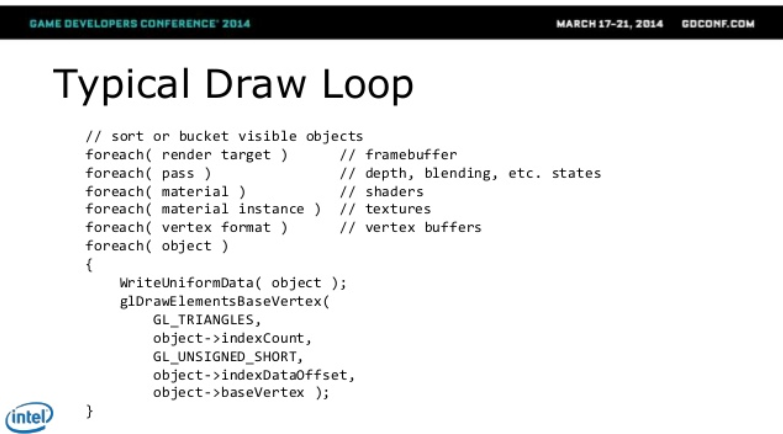

There also exist more automatic solutions to preventing binding leakage. If you’re able to define your rendering code as a series of nested loops (see below), then you can be sure that you’re setting all necessary state before each further nested loop, and you can reset the state all together in one place. Another way to design these nested loops is using sort-based draw call bucketing, which you can use to automatically iterate over all the draw calls in your program in an order that respects which state changes are expensive and which ones aren’t. Since that design also loops over draw calls in order of state changes, you can again have some guarantees about the lifetime of your state changes, so you can reset any state at a place that produces the least waste. This was approximately the design we used in fictional-doodle’s renderer, which you can find here: https://github.com/nlguillemot/fictional-doodle/blob/master/renderer.cpp#L86
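To make the sort-based bucketing idea a bit more concrete, here is a minimal sketch. It is not the code from fictional-doodle: the DrawItem struct, the bit layout of the sort key, and the assumption that switching programs costs more than switching textures, which costs more than switching meshes, are all choices made for this example. The GL calls are left as comments, and the function only counts the state changes it would have issued.

```cpp
#include <algorithm>
#include <cstdint>
#include <vector>

// Hypothetical draw record: the IDs index into whatever tables the scene has.
struct DrawItem
{
    uint16_t ProgramID; // assumed most expensive to switch, so it gets the highest bits
    uint16_t TextureID;
    uint32_t MeshID;    // assumed cheapest to switch, lowest bits
};

// Packs the IDs into one integer, so that sorting by it groups the draws
// by program first, then by texture, then by mesh.
inline uint64_t MakeSortKey(const DrawItem& d)
{
    return (uint64_t(d.ProgramID) << 48) | (uint64_t(d.TextureID) << 32) | uint64_t(d.MeshID);
}

struct StateChangeCounts
{
    int Programs = 0;
    int Textures = 0;
    int Meshes = 0;
};

// Sorts the draws, then walks them in order and "binds" only the state that
// differs from the previous draw.
inline StateChangeCounts SubmitSorted(std::vector<DrawItem>& draws)
{
    std::sort(draws.begin(), draws.end(), [](const DrawItem& a, const DrawItem& b) {
        return MakeSortKey(a) < MakeSortKey(b);
    });

    StateChangeCounts counts;
    const DrawItem* prev = nullptr;
    for (const DrawItem& d : draws)
    {
        if (!prev || prev->ProgramID != d.ProgramID) counts.Programs++; // glUseProgram would go here
        if (!prev || prev->TextureID != d.TextureID) counts.Textures++; // glBindTexture would go here
        if (!prev || prev->MeshID != d.MeshID) counts.Meshes++;         // glBindVertexArray would go here
        // the draw call itself would go here
        prev = &d;
    }
    // All state set inside the loop can be reset once, here, after the last draw.
    return counts;
}
```

With five draws that alternate between two programs, submitting them unsorted would switch programs five times, while the sorted order switches only twice.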

Conclusions and Future Work

The details of all this stuff are not super important, and the amount of engineering effort you should invest depends on the scale of your projects. Since I’m usually just implementing graphics techniques in isolation, I don’t have to worry too much about highly polished interfaces. I’ve enjoyed the benefits of being able to reuse Scene code, and I’ve been able to quickly write new Renderers by copying and pasting another one for some base code. My projects that separate the Scene and Renderer have so far been enjoyable, straightforward, and productive to work on, so I consider this design successful.

One part where this starts to crack is when a single project implements a whole bunch of rendering techniques, like if you’re trying to compare them against each other. You can probably get away with stuffing everything into the Scene and Renderer, but there’s a certain threshold where you can no longer keep the whole project in your mind at the same time, and it becomes important to limit the set of data that a given function can access to better understand the code. You might be able to get away with grouping up the model or viewmodel data into different structs, whatever helps keep you sane.

Another problem which I haven’t addressed much is multi-threading. OpenGL projects generally don’t worry much about multi-threading since the OpenGL API abstracts almost all that stuff away from you. This abstraction is partly what makes OpenGL a good API for prototyping rendering techniques, but in the future I’d like to apply this design to newer APIs like Vulkan and Direct3D 12. I suspect that I’ll have to identify which data varies “per-frame” (in order to have multiple frames in flight), while using some encapsulation to prevent one frame from reading the data that belongs to another frame, or to avoid global state in general. I also suspect that the design of Render() will have to change to allow multi-threaded evaluation (to write commands faster using CPU parallelism). I’m currently looking at TBB flow graph as a possible solution for that.

Appendix A: Transformation Hierarchies

It’s commonly desired to have a hierarchy of transforms to render a scene, sometimes called a “scene graph”. In practice, you shouldn’t think too hard about the whole scene graph thing, since that mindset has a lot of problems. If you do want to implement a tree of transforms, I would recommend keeping the links between transforms separate from the transforms themselves. For example, you might have a table for all the transforms, a table for mesh instances, and a table for meshes. Each mesh instance then keeps a foreign key to a transform and mesh that represent it. To support relative transformations, I suggest creating a new table that stores the edges of the transformation tree, meaning each object in the table stores the foreign keys of two transforms: The parent and the child.

The goal of this design is to compute the absolute transforms of each object at each frame, based on their relative transforms (in the transforms table) and the edges between transforms (in the transform edge table). To do this, make a copy of the local transforms array, then iterate over the transform edges in partial-order based on the height in the tree of the child of each edge. By iterating over the edges in this order to propagate transforms, you’ll always update the parent of a node before its children, meaning the transforms will propagate properly from top to bottom of the tree. You can then use these absolute transforms as a model matrix to render your objects.

By keeping the edges between the transforms in their own table, the cost of the propagation will be proportional to the number of edges, rather than the number of nodes. This is nice if you have fewer edges than nodes, which is likely if your tree is mostly flat. What I mean is that scene tree designs can easily end up with 1 root node with a bazillion children, which is just useless, and is avoided in this design. This also keeps the data structure for your scene nice and flat, without having to have a recursive scene node data structure (like many scene graph things end up).
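As a rough illustration of the propagation described above, here is a sketch that uses a bare-bones column-major matrix type instead of GLM so that it stands alone. The names TransformEdge, SortEdgesByChildDepth and ComputeAbsoluteTransforms are made up for this example. It assumes that transform IDs are plain array indices and that the edges form a forest (at most one parent per transform, no cycles).

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using Mat4 = std::array<float, 16>; // column-major, like OpenGL and GLM

inline Mat4 Multiply(const Mat4& a, const Mat4& b)
{
    Mat4 r{}; // zero-initialized
    for (int col = 0; col < 4; col++)
        for (int row = 0; row < 4; row++)
            for (int k = 0; k < 4; k++)
                r[col * 4 + row] += a[k * 4 + row] * b[col * 4 + k];
    return r;
}

inline Mat4 Translation(float x, float y, float z)
{
    return Mat4{ 1.0f, 0.0f, 0.0f, 0.0f,
                 0.0f, 1.0f, 0.0f, 0.0f,
                 0.0f, 0.0f, 1.0f, 0.0f,
                 x,    y,    z,    1.0f };
}

// One row of the hypothetical "transform edge" table.
struct TransformEdge
{
    uint32_t ParentID;
    uint32_t ChildID;
};

// Orders the edges by the depth of their child, shallowest first, so that a
// parent's edge always comes before its children's edges. The depth is found
// by walking up the parent links, which is simple but not fast, so this is
// meant as a preprocessing step.
inline void SortEdgesByChildDepth(std::vector<TransformEdge>& edges, size_t transformCount)
{
    std::vector<int64_t> parent(transformCount, -1);
    for (const TransformEdge& e : edges)
        parent[e.ChildID] = e.ParentID;

    std::vector<uint32_t> depth(transformCount, 0);
    for (size_t i = 0; i < transformCount; i++)
        for (int64_t p = parent[i]; p != -1; p = parent[static_cast<size_t>(p)])
            depth[i]++;

    std::stable_sort(edges.begin(), edges.end(), [&](const TransformEdge& a, const TransformEdge& b) {
        return depth[a.ChildID] < depth[b.ChildID];
    });
}

// Copies the local transforms, then walks the sorted edges so that each
// parent's absolute transform is final before its children read it.
inline std::vector<Mat4> ComputeAbsoluteTransforms(
    const std::vector<Mat4>& locals, const std::vector<TransformEdge>& sortedEdges)
{
    std::vector<Mat4> absolute = locals;
    for (const TransformEdge& e : sortedEdges)
    {
        assert(e.ParentID < locals.size() && e.ChildID < locals.size());
        absolute[e.ChildID] = Multiply(absolute[e.ParentID], locals[e.ChildID]);
    }
    return absolute;
}
```

Transforms that appear in no edge keep their local transform as their absolute transform, and the loop never touches them.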

Then again, I didn’t apply this in the examples earlier in this article, and everything still turned out ok. Pick the design that makes sense for your workload.

Appendix B: User Interface

You probably want some amount of GUI to work with the renderer. Hands down, the easiest solution is to use Dear ImGui. You can easily integrate it into your OpenGL project by copy-pasting the code from one of their example programs. ImGui is used in the dof program mentioned earlier in this article, so you can consult how I used it there too if you’re interested.

Working with cameras is also important for 3D programming of course. Programming a camera is kinda tedious, so I have some ready-made libraries for that too. You can use flythrough_camera to have a camera that lets you fly around the 3D world, and you can use arcball_camera to have a camera that lets you rotate around the scene. Both of these libraries are a single C file you can just add to your project, and you use them by calling an immediate-mode style update function once per frame. These libraries tend to integrate naturally.

Appendix C: Loading OpenGL Functions

One of my pet peeves with typical OpenGL programs is that people use horribly over-engineered libraries like GLEW in order to dynamically link OpenGL functions. You can do something way simpler if you only need to use Core GL and the few extra nonstandard functions that are actually useful. What I did was write a script that reads the contents of Khronos’ official glcorearb.h header and produces my own opengl.h and opengl.cpp files. The header declares extern function pointers to all OpenGL entry points, and opengl.cpp uses SDL’s SDL_GL_GetProcAddress function to load all the functions.

To use it, you just have to add those two files to your project, call OpenGL_Init() once after creating your OpenGL context, and you’re good to go. No need to link an external library (like GLEW), and no need to deal with all of GLEW’s cruft. You can find the files here: opengl.h and opengl.cpp. You can easily modify them by hand if you want to adapt them to GLFW. Since these files are so simple, they’re much more “hackable”, allowing you to do things like wrapping calls in glGetError (which was done in the “fictional-doodle” project, as I explained earlier).
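Here is a stripped-down sketch of what such a loader can look like. It is not the generated opengl.h/opengl.cpp: it covers only two entry points, it uses plain C types instead of the GL typedefs, it lives in a made-up glsketch namespace to avoid clashing with real GL headers, and OpenGL_Init takes the lookup function as a parameter (an assumption of this sketch) so that it can be tried with a stub instead of a real context.

```cpp
#include <cassert>
#include <cstdio>
#include <cstring>

namespace glsketch
{
    // Function pointer types, in the style of glcorearb.h (simplified).
    typedef void (*PFNGLCLEARPROC)(unsigned int mask);
    typedef unsigned int (*PFNGLGETERRORPROC)();

    // In a real project these would be declared `extern` in the header
    // and defined once in the .cpp file.
    PFNGLCLEARPROC glClear = nullptr;
    PFNGLGETERRORPROC glGetError = nullptr;

    // Shape of the lookup function (SDL2's SDL_GL_GetProcAddress has this shape).
    typedef void* (*GetProcAddressFn)(const char* name);

    // Loads every entry point through getProc. Returns false if any is missing.
    inline bool OpenGL_Init(GetProcAddressFn getProc)
    {
        assert(getProc != nullptr);
        bool ok = true;
#define GLSKETCH_LOAD(type, name) \
        name = reinterpret_cast<type>(getProc(#name)); \
        if (!name) { std::fprintf(stderr, "Failed to load %s\n", #name); ok = false; }
        GLSKETCH_LOAD(PFNGLCLEARPROC, glClear)
        GLSKETCH_LOAD(PFNGLGETERRORPROC, glGetError)
#undef GLSKETCH_LOAD
        return ok;
    }

    // Stand-in lookup function for trying the sketch without a GL context.
    inline void StubClear(unsigned int) {}
    inline unsigned int StubGetError() { return 0; }
    inline void* StubGetProcAddress(const char* name)
    {
        if (std::strcmp(name, "glClear") == 0) return reinterpret_cast<void*>(&StubClear);
        if (std::strcmp(name, "glGetError") == 0) return reinterpret_cast<void*>(&StubGetError);
        return nullptr;
    }
}
```

With SDL2, the call after context creation would presumably be glsketch::OpenGL_Init(SDL_GL_GetProcAddress). A generator script would emit one typedef, one pointer, and one load line per entry point in glcorearb.h.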

As a bonus, this header also defines GLDrawElementsIndirectCommand and GLDrawArraysIndirectCommand, which are not in the standard GL headers but exist only in the GL documentation for indirect rendering. You can use these structs to pass around draw arguments (perhaps storing them once up front in your Scene) or use them in glDrawElementsIndirect and glDrawArraysIndirect calls.
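For reference, here is a sketch of what these two structs can look like. The field order follows the layout given in the OpenGL documentation for the indirect draw calls, and the field names match the ones used in the Render() example earlier (count, primCount, firstIndex, baseVertex, baseInstance). The exact definitions in opengl.h may differ, and the IndexByteOffset helper is made up for this example.

```cpp
#include <cstddef>
#include <cstdint>

struct GLDrawElementsIndirectCommand
{
    uint32_t count;        // number of indices to draw
    uint32_t primCount;    // number of instances
    uint32_t firstIndex;   // offset into the index buffer, counted in indices
    int32_t  baseVertex;   // value added to each index
    uint32_t baseInstance; // first instance ID
};

struct GLDrawArraysIndirectCommand
{
    uint32_t count;        // number of vertices to draw
    uint32_t primCount;    // number of instances
    uint32_t first;        // first vertex
    uint32_t baseInstance; // first instance ID
};

// The GPU reads these as tightly packed 32-bit values, so there must be no padding.
static_assert(sizeof(GLDrawElementsIndirectCommand) == 5 * sizeof(uint32_t), "unexpected padding");
static_assert(sizeof(GLDrawArraysIndirectCommand) == 4 * sizeof(uint32_t), "unexpected padding");

// Byte offset to pass as the `indices` argument of a non-indirect draw call,
// assuming 32-bit indices (GL_UNSIGNED_INT), as in the Render() example.
inline size_t IndexByteOffset(const GLDrawElementsIndirectCommand& cmd)
{
    return sizeof(uint32_t) * cmd.firstIndex;
}
```

Storing one of these per sub-mesh lets the same data feed either a regular draw call (as in the Render() example) or an indirect draw call from a buffer.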

Awesome post! Really enjoyed it. The database approach for the Scene is a really interesting concept, I’ve come across a similar idea before while reading this – http://www.dataorienteddesign.com/dodmain/ – might be of interest if you haven’t seen it before! It’s really nice to see a concrete use case of a prototyping style renderer without trying to have everything and the kitchen sink style approach. Thank you for sharing!

Could you elaborate on the below paragraph and perhaps provide a code sample?

“To do this, make a copy of the local transforms array, then iterate over the transform edges in partial-order based on the height in the tree of the child of each edge. By iterating over the edges in this order to propagate transforms, you’ll always update the parent of a node before its children”

The algorithm to arrange the nodes in that order is called topological sort. It’s relatively complex, so it’s mostly useful as a preprocessing step for parts of the scenegraph that are static. For example, MD5Mesh stores the skeleton hierarchy in this order, which makes updating the skeleton very efficient. I haven’t thought much about if there’s a good way to do runtime updates to the scenegraph while keeping it in partial order. It’s probably possible, but it’s also not necessarily a bad idea to split the static and dynamic parts of the scenegraph and use different approaches.

Got it! I totally misunderstood and thought you meant there was some magic to allow fast runtime sorting :p

Thanks for clearing that up!

Great article, keep up the good work!

Hi, very helpful article.

I understand the implementation of your packed_freelist collection and it makes sense to me that the packed array is really optimized for fast iterations, but in your code section here:

const Instance* instance = &mScene->Instances[instanceID];

const Mesh* mesh = &mScene->Meshes[instance->MeshID];

const Transform* transform = &mScene->Transforms[instance->TransformID];

It looks like there is random indexing between multiple packed arrays, causing cache misses. Are the cache misses intrinsic to this design, or am I missing something here?

Again, Thanks for the great article.

In some scenarios you can guarantee that the order of traversal is the same as order in memory, like for example sorting a skeleton joint hierarchy in order of parent to child. If the data is more dynamic and flexible then that’s probably more challenging to achieve.

Another possible solution is that you could “optimize” the locality of the traversal in the code you showed by batching it in multiple passes. For example, if you get the list of all transform IDs you want to do the operation on and sort it ahead of time.

At the end of the day I think you just have to look at what assumptions/constraints you have for your specific use case to design a solution that exploits those assumptions and constraints.
